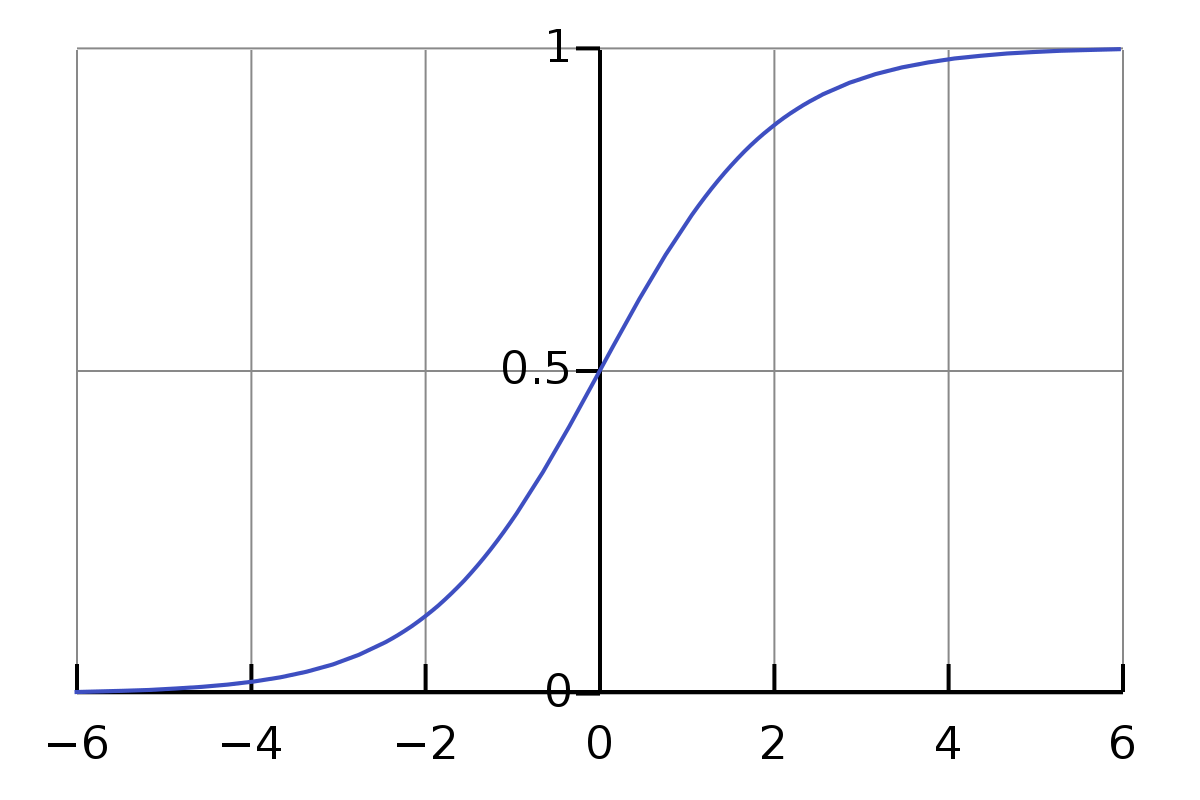

Sigmoid activations are a type of activation function for neural networks, based on the logistic function:

$$f(x) = \frac{1}{1 + e^{-x}}$$
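Evaluating the sigmoid literally as `1 / (1 + exp(-x))` works for most inputs, but `exp(-x)` overflows for large negative `x` (in Python, `math.exp(1000)` raises `OverflowError`). The sketch below assumes scalar float inputs and uses a common branching trick for numerical stability; the function name `sigmoid` is illustrative, and the source does not prescribe any particular implementation.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid f(x) = 1 / (1 + e^{-x}), evaluated without overflow."""
    if x >= 0:
        # For x >= 0, e^{-x} <= 1, so the direct formula is safe.
        return 1.0 / (1.0 + math.exp(-x))
    # For x < 0, use the equivalent form e^{x} / (1 + e^{x}),
    # which avoids computing the huge value e^{-x}.
    z = math.exp(x)
    return z / (1.0 + z)
```

For example, `sigmoid(0.0)` returns `0.5`, and `sigmoid(-1000.0)` returns `0.0` instead of raising an error. Output always lies in [0, 1] (strictly inside (0, 1) in exact arithmetic; floating-point rounding reaches the endpoints for large `|x|`).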

Some drawbacks of this activation that have been noted in the literature are:

- sharply damped gradients during backpropagation from deeper hidden layers to the inputs

- gradient saturation (the derivative approaches zero for large positive or negative inputs)

- slow convergence
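The first two drawbacks follow from the sigmoid's derivative, $f'(x) = f(x)\,(1 - f(x))$, which peaks at $0.25$ when $x = 0$ and shrinks toward zero as $|x|$ grows. The sketch below is a toy illustration, assuming a hypothetical scalar "network" $h_k = f(w \cdot h_{k-1})$ with a single shared weight `w` (default 1.0); the helper names `sigmoid_grad` and `input_gradient` are illustrative. By the chain rule each layer contributes one factor $w \cdot f'(\cdot)$, so with $w = 1$ the gradient reaching the input is at most $0.25^n$ after $n$ layers.

```python
import math

def sigmoid(x: float) -> float:
    """Numerically stable logistic sigmoid."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def sigmoid_grad(x: float) -> float:
    """Derivative f'(x) = f(x) * (1 - f(x)); its maximum is 0.25 at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)

def input_gradient(x0: float, n_layers: int, w: float = 1.0) -> float:
    """d h_n / d x0 for the toy chain h_k = sigmoid(w * h_{k-1}), h_0 = x0."""
    h, grad = x0, 1.0
    for _ in range(n_layers):
        pre = w * h
        grad *= w * sigmoid_grad(pre)  # chain rule: one factor per layer
        h = sigmoid(pre)
    return grad

# Saturation: the local gradient collapses as |x| grows.
for x in (0.0, 2.0, 5.0, 10.0):
    print(f"x={x:5.1f}  f'(x)={sigmoid_grad(x):.6f}")

# Depth: the gradient at the input shrinks geometrically with the layer count.
for n in (1, 5, 10, 20):
    print(f"layers={n:2d}  dh_n/dx0={input_gradient(0.0, n):.3e}")
```

With weights of magnitude at most 1, no choice of inputs can keep these per-layer factors above 0.25, which is why deep stacks of sigmoid layers tend to pass very small gradients back to early layers and train slowly.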

References