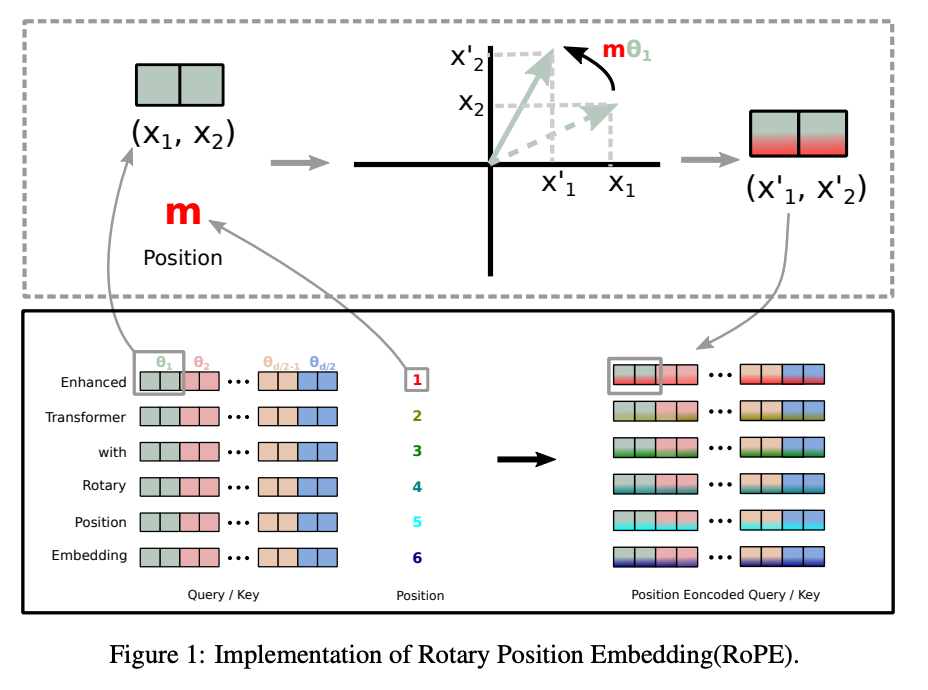

Rotary Position Embedding, or RoPE, is a type of position embedding that encodes absolute positional information with a rotation matrix and naturally incorporates explicit relative position dependency into the self-attention formulation. Notably, RoPE comes with valuable properties such as:

- the flexibility to extend to any sequence length

- decaying inter-token dependency with increasing relative distance

- the capability of equipping linear self-attention with relative position encoding
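A minimal pure-Python sketch of the rotation idea follows. It is not the authors' implementation. It assumes the pairwise layout (x_{2i}, x_{2i+1}) and the frequency schedule θ_i = 10000^(-2i/d), and the helper names `rope`, `dot`, and `attention_score` are hypothetical. Each pair of coordinates at position m is rotated by the angle m·θ_i. Rotations compose, so R_m^T R_n = R_{n-m}, and the query-key score therefore depends only on the offset n - m, even though each vector was encoded with its absolute position.

```python
import math
from typing import List


def rope(x: List[float], pos: int, base: float = 10000.0) -> List[float]:
    """Rotate each pair (x[2i], x[2i+1]) by the angle pos * theta_i.

    Assumes theta_i = base ** (-2i / d); the dimension d must be even.
    """
    d = len(x)
    if d % 2 != 0:
        raise ValueError("embedding dimension must be even")
    out = [0.0] * d
    for i in range(d // 2):
        theta = base ** (-2.0 * i / d)  # per-pair rotation frequency
        angle = pos * theta             # absolute position sets the angle
        c, s = math.cos(angle), math.sin(angle)
        a, b = x[2 * i], x[2 * i + 1]
        # 2D rotation matrix [[c, -s], [s, c]] applied to (a, b)
        out[2 * i] = a * c - b * s
        out[2 * i + 1] = a * s + b * c
    return out


def dot(u: List[float], v: List[float]) -> float:
    """Plain inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def attention_score(q: List[float], k: List[float], m: int, n: int) -> float:
    """Unnormalized score between a query at position m and a key at position n."""
    return dot(rope(q, m), rope(k, n))


if __name__ == "__main__":
    q = [0.3, -1.2, 0.5, 0.8]
    k = [1.0, 0.4, -0.7, 0.2]
    # Same relative offset (3) at different absolute positions:
    # the two scores agree up to floating-point rounding.
    print(attention_score(q, k, 5, 2))
    print(attention_score(q, k, 13, 10))
```

Because the rotation angle is computed from `pos` on the fly rather than looked up in a learned table, the same function applies to any position, which matches the sequence-length flexibility listed above.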
